Designing a VR Experience to Create Empathy for Bats

Since the COVID-19 pandemic, there has been a rise in attempts to eradicate bat populations, driven by unfounded fears about disease transmission.

According to AustinBats.org,

"Bats are America's most rapidly declining and threatened warm-blooded animals. Alarming losses of free-tailed bats have been reported though their population status is inadequately monitored. Even the Congress Ave. Bridge bats appear to be in decline."

This project was inspired by the need to build empathy for bats and awareness of their environmental importance. My team and I were also based in Austin, dubbed the "City of Bats" in reference to the 1.5 million bats living near the Congress Avenue Bridge.

I worked as the Lead UX Researcher on this project alongside Ishita, Amanda, Fanyi and Soojin.

Research phase:

• Academic literature review

• User journey maps

• Usability testing

• Data analysis

Design phase:

• Storyboards

• UX writing

• Wireframing

• Prototyping

Tools: Canva, Unity, Figma, Qualtrics

Timeline: Fall 2022 (8 weeks, October to December)

• Secondary research to understand bats' contributions to our ecological balance

• Examining attitudes towards bats

• Exploring storytelling avenues in VR

• Storyboarding to clarify different aspects of the experience

• Designing a mid-fi prototype in Unity3D

• Creating a hi-fi prototype to test with users

• Designing a questionnaire to capture thoughts and feedback

• Inviting peers to test the experience

Because our project involved information architecture that had to be tested for an exhibit, we used an agile design methodology. This iterative process helped us combine the multiple layers (the content research, the application, and the 3D rendering of the space) that were crucial to the objectives we set during ideation.



To better understand the problem space, I researched the environmental role of bats and reviewed studies on how virtual environments can be designed to combat fears related to animals.

Bats save farmers a billion dollars annually in avoided pesticide use by intercepting migrating pests and reducing egg-laying on crops. They are also responsible for pollination and seed dispersal; the blue agave, for example, relies on bats for pollination.

Misconceptions about bats abound. Bats are no more disease-ridden than other wild animals, yet unfounded speculation and misleading research continue to strengthen the stigma against them.

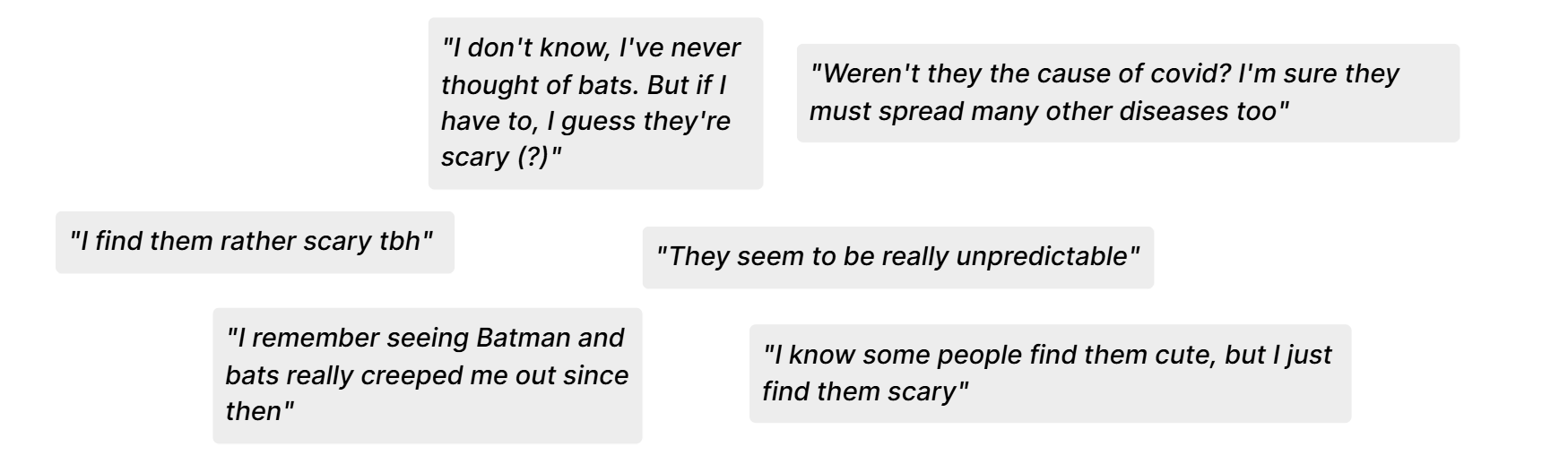

I surveyed my classmates to understand common perceptions of bats and to clarify our target audience.

Here are real quotes from Austinites:

These pain points made it clear that much of the stigma surrounding bats comes from fear. It is difficult to empathize with a creature you are afraid of, and this insight became our guiding principle.

Our initial idea centered on a "Day in the Life" narrative. The experience would take the user through multiple activities that bats engage in, making them aware of the bats' importance and the perils they face. However, further brainstorming sessions convinced us that a gamified experience would work better, so we explored that direction further.

When participants embody the avatar of a bat or watch a 360-degree documentary about bats, they are more likely to empathize with the species. Empathizing with other animals allows us to deconstruct the anthropocentric viewpoint we have all learned growing up. This formed the backbone of our idea.

When participants embody virtual avatars radically different from their own bodies, they report strong feelings of immersion, presence, and ownership over their virtual bodies. Strong effects of bodily ownership can cause profound changes in the users’ emotions and behavior.

A user who inhabits an animal's avatar tends to think and act like that animal, and in doing so develops empathy for it. VR games have been used in the past to foster empathy for others. There is therefore considerable potential to increase empathy for the species whose avatar the user inhabits.

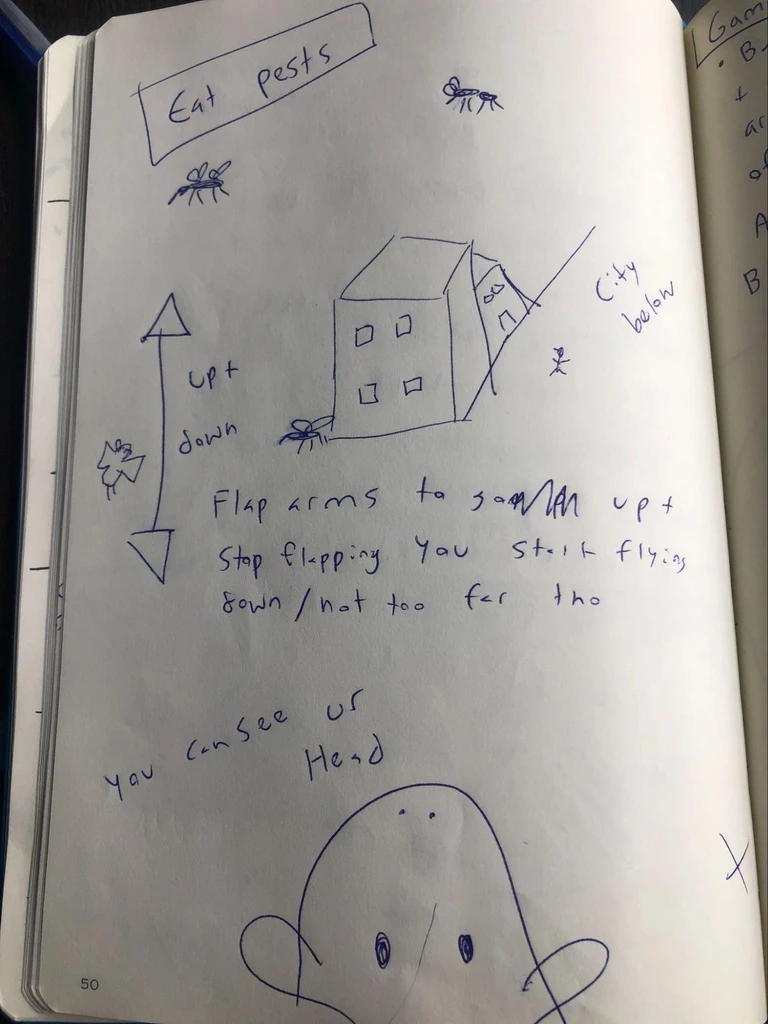

The team settled on a game in which the player, embodying a bat, tries to catch as many mosquitoes as possible. The mosquitoes are placed along the bat's path, and by catching them the bat helps humans.

I decided to use the Meta Quest 2 because it is user-friendly, affordable and widely available.

Unlike the Rift, it is not tethered to a computer by a cable. It also offers accurate motion tracking and a higher refresh rate, which enhance immersion and help minimize motion sickness.

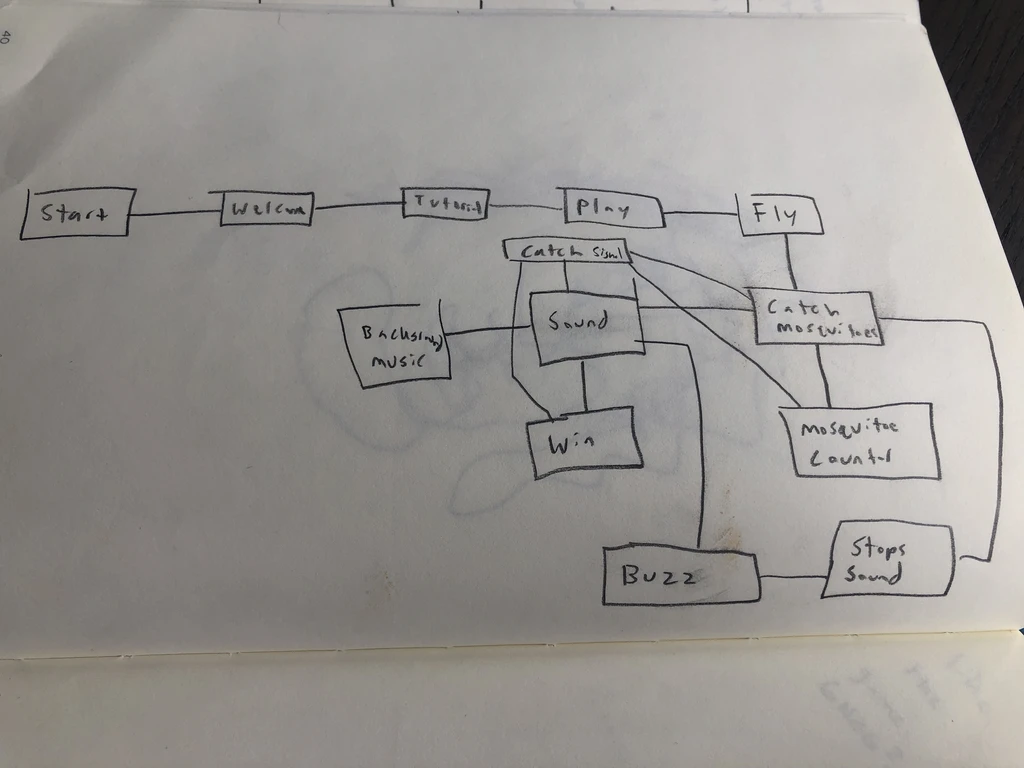

Figure 1: The game flow and mechanics of the avatar

An important point of contention was how the user would embody the avatar. Our secondary research suggested that first-person embodiment produces the highest levels of IVBO (Illusion of Virtual Body Ownership). However, it would not serve our goal of building empathy for the avatar, because in first person the user cannot see it.

Another approach was to have the user follow the avatar from a third-person POV. This would increase empathy but reduce IVBO.

We settled on a hybrid point-of-view (POV) approach: the camera sits slightly above the bat, so the user can watch the bat as it moves without feeling as detached from the game as in a full third-person view.
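The hybrid camera can be thought of as a simple follow rig. The Python below is illustrative only. The prototype was built in Unity, and this is not its code. The names `Vec3`, `hybrid_camera_target`, and `smooth_follow` are hypothetical. So are the height and trail offsets, the yaw convention, and the smoothing factor.

The camera's target is the bat's position, raised by a small height and pulled back a short distance along the bat's heading. Each frame, the camera eases toward that target instead of snapping to it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def __sub__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)

    def scale(self, k: float) -> "Vec3":
        return Vec3(self.x * k, self.y * k, self.z * k)


# Hypothetical offsets in scene units: a little above the bat, a little behind it.
CAMERA_HEIGHT = 0.6
CAMERA_TRAIL = 0.4


def hybrid_camera_target(bat_pos: Vec3, bat_yaw_deg: float,
                         height: float = CAMERA_HEIGHT,
                         trail: float = CAMERA_TRAIL) -> Vec3:
    """Return where the camera should sit: above and slightly behind the bat.

    Yaw is the bat's heading around the vertical (y) axis; 0 degrees faces +z.
    """
    yaw = math.radians(bat_yaw_deg)
    forward = Vec3(math.sin(yaw), 0.0, math.cos(yaw))
    return bat_pos + Vec3(0.0, height, 0.0) - forward.scale(trail)


def smooth_follow(current: Vec3, target: Vec3, t: float) -> Vec3:
    """Move a fraction t (clamped to 0..1) of the way from current toward target."""
    t = max(0.0, min(1.0, t))
    return current + (target - current).scale(t)
```

Small height and trail values keep the bat in view, as a third-person camera would, while keeping the viewpoint close to the bat's own. The easing step is one common way to damp camera jitter, which could otherwise add to motion discomfort. In a real engine, `t` would usually be scaled by the frame time.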

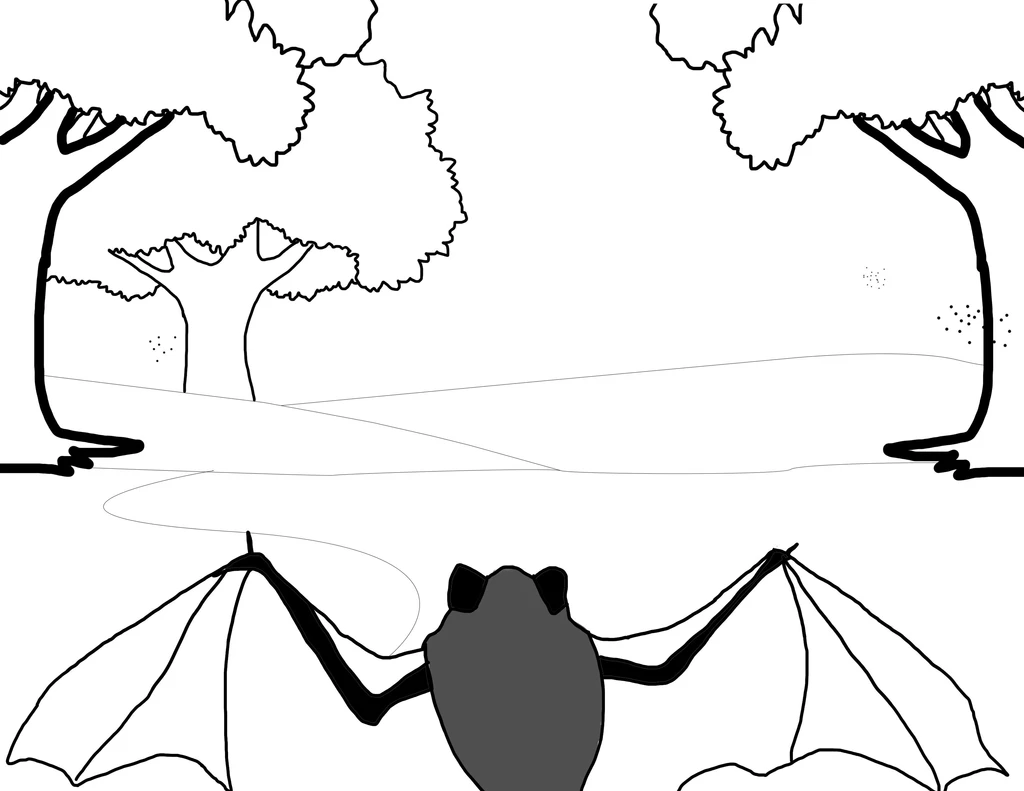

The mid-fi prototype featured an urban forest with a freely moving bat character. It also included mosquitoes that could be 'caught' by pressing a button on the Oculus controller. However, the height of the bat asset and the unobstructed pathway created a risk of simulator sickness.

With our intended audiences in mind, we adapted our product to account for how the physical space, the application, and interpersonal interactions would affect the user experience.


We drew the initial wireframes on paper to understand the flow of the game and the features and characters we wanted to add. Ideas included pleasant background music to set the mood, plus a 'snap' sound and haptic feedback when a mosquito is successfully caught.
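In the catch mechanic, the player presses a controller button to catch mosquitoes near the bat, and a 'snap' sound and haptic feedback confirm a catch. The Python below sketches that mechanic as a per-frame check. It is not the Unity implementation.

The names `CATCH_RADIUS`, `Mosquito`, `CatchResult`, `try_catch`, and `score` are hypothetical, and the radius is an assumed value. Audio and haptics are represented as flags that a game engine would act on.

```python
import math
from dataclasses import dataclass, field

# Hypothetical catch distance in scene units; would be tuned in playtesting.
CATCH_RADIUS = 0.5


@dataclass
class Mosquito:
    mosquito_id: int
    pos: tuple[float, float, float]
    caught: bool = False


@dataclass
class CatchResult:
    caught_ids: list[int] = field(default_factory=list)
    play_snap: bool = False     # cue for the 'snap' sound
    haptic_pulse: bool = False  # cue for controller vibration


def try_catch(bat_pos: tuple[float, float, float],
              mosquitoes: list[Mosquito],
              button_pressed: bool,
              radius: float = CATCH_RADIUS) -> CatchResult:
    """Catch every uncaught mosquito within `radius` of the bat when the button is pressed."""
    result = CatchResult()
    if not button_pressed:
        return result
    for m in mosquitoes:
        if not m.caught and math.dist(bat_pos, m.pos) <= radius:
            m.caught = True
            result.caught_ids.append(m.mosquito_id)
    # Feedback fires only when something was actually caught.
    if result.caught_ids:
        result.play_snap = True
        result.haptic_pulse = True
    return result


def score(mosquitoes: list[Mosquito]) -> int:
    """Number of mosquitoes caught so far."""
    return sum(m.caught for m in mosquitoes)
```

Tying feedback to an actual catch, rather than to every button press, keeps the snap and vibration meaningful as confirmation.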


For the physical space, I researched Islamic architecture to inform its aesthetic. Based on my content research, my team calculated foot traffic at the Austin Public Library and sketched rooms with various ways of organizing information. We later visualized these sketches in AutoCAD.


A VR showcase was held at the University of Texas at Austin, where students and faculty could try out different projects.

The research questions that guided my user testing were based on the literature review conducted earlier.

I created pre-test and post-test questionnaires to answer the following research question:

How does immersion impact empathy-building in users?

Participants rated their feelings of self-presence, spatial presence, and empathy on a 5-point Likert scale (1 to 5).
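A per-construct summary of the 1-to-5 ratings might look like the Python sketch below. The construct keys and any example scores are hypothetical placeholders, not the study's data. Likert responses are ordinal, so the sketch reports the median and the full distribution alongside the mean.

```python
from collections import Counter
from statistics import mean, median


def summarize_likert(responses: dict[str, list[int]]) -> dict[str, dict]:
    """Summarize 1-5 Likert ratings per construct.

    `responses` maps a construct name (e.g. a hypothetical "empathy" key)
    to one rating per participant.
    """
    summary = {}
    for construct, scores in responses.items():
        if not scores:
            raise ValueError(f"no ratings for {construct!r}")
        if any(s not in range(1, 6) for s in scores):
            raise ValueError(f"ratings for {construct!r} must be integers 1-5")
        counts = Counter(scores)
        summary[construct] = {
            "n": len(scores),
            "median": median(scores),
            "mean": round(mean(scores), 2),
            # Responses at each scale point, including points nobody chose.
            "distribution": {level: counts[level] for level in range(1, 6)},
        }
    return summary
```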

A total of 22 people took the survey.

The game boosted empathy and improved attitudes toward bats for 10 participants (48%):

3 started out positive and became more positive,

3 started out neutral and became more positive, and

3 started out negative and became more positive.
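The breakdown above groups participants by their attitude before playing. The Python below is a minimal sketch of that tabulation. It assumes that each participant has a pre-test and a post-test attitude score on the same 1-to-5 scale. It also assumes a hypothetical mapping: 1 to 2 counts as negative, 3 as neutral, and 4 to 5 as positive. The example pairs in any usage are made up for illustration.

```python
from collections import Counter


def baseline_category(score: int) -> str:
    """Map a 1-5 attitude score to a category (hypothetical cut-offs)."""
    if not 1 <= score <= 5:
        raise ValueError(f"score must be between 1 and 5, got {score}")
    if score <= 2:
        return "negative"
    if score == 3:
        return "neutral"
    return "positive"


def attitude_shifts(pairs: list[tuple[int, int]]) -> tuple[Counter, float]:
    """Count improvers by baseline category and return the share that improved.

    Each pair is (pre_score, post_score) for one participant.
    """
    improved: Counter = Counter()
    for pre, post in pairs:
        baseline = baseline_category(pre)  # also validates the pre score
        if not 1 <= post <= 5:
            raise ValueError(f"score must be between 1 and 5, got {post}")
        if post > pre:
            improved[baseline] += 1
    share = sum(improved.values()) / len(pairs) if pairs else 0.0
    return improved, share
```

The share depends on which participants are passed in: all respondents, or only those who completed both questionnaires. Also, on this scale a participant who starts at 5 cannot register an improvement.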


"I have been unsure about the utility of VR for dealing with social issues. However, this game has given me a more moderated perspective. I still think that the VR hardware itself can be made more accessible".

"I am still a bit scared of bats but I now realize that they’re just like any other animal".

The game doesn’t test whether the user has understood the controls.

Action #1: Create a help directory that remains accessible through the main menu and throughout the game.

Action #2: Add a tutorial that verifies whether the user has understood the controls. This tutorial should be optional.

The game could be expanded to accommodate the intended storylines and actionable advice.

Action #1: Add more levels, such as one set during the Texas Freeze, to increase the game's replayability and complexity.

Action #2: Add resources on bat conservation efforts so players can contribute in whatever way possible.

The game is not fully accessible to people with disabilities or to people from other linguistic backgrounds.

Action #1: Allow players to switch to voice input.

Action #2: Give the option to choose other languages to play in.

Action #3: Add audio directions for users who cannot read.

On the basis of lo-fi feedback, we created an updated mid-fi Figma prototype:

We conducted mid-fi testing right after spring break to further develop our phone app and to understand user behavior at in-person exhibits.

Nomenclature: The names of several pages (e.g., Art, Explore, My Library) confused users. It was challenging for users to complete information-seeking tasks on the first pass when they were unsure what they would find on these pages.

Navigability: The hamburger menu needed to be workshopped to include, exclude, and reorder functions. Additionally, it was challenging for users to locate the Princess Badr exhibit because they weren’t sure where to navigate (e.g., Exhibits, My Events, My Master Library).

Core Functionality: Some users weren’t sure they grasped the main purpose of the app. Was it an e-reader? A booking site? A source of additional information? The AR game? We decided to focus less on booking and e-reading, and more on providing additional information and the AR game.

We set up our simulated environment in the PCL grad student lounge.